This video is available on Rumble, BitChute, and Odysee.

Artificial intelligence “could perpetuate racism, sexism and other biases in society.” So says NPR along with just about all big media.

President Biden fixed that with an executive order that “establishes new standards for AI safety and security, protects Americans’ privacy, advances equity and civil rights, stands up for consumers and workers, promotes innovation and competition, advances American leadership around the world, and more.”

Wow. What a guy.

Image generation is part of AI. It makes pictures for you, and surprisingly good ones.

I compared two programs. One is Bing, produced by Microsoft, which must be trying to please Joe Biden. It warns you it doesn’t allow gore, hate, abuse, offense, etc. The other is Gab AI, which says, “Our policy on ‘hate speech’ is to allow all speech which is permitted by the First Amendment.”

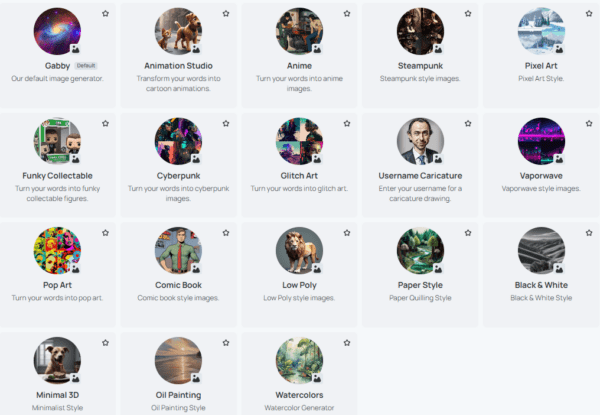

Each program lets you ask for images in many different styles. Here are Gab’s different options, and Bing lets you specify any style you want.

I stuck with plain default styles in both. Gab gives you just one image while Bing gives you several.

Bing is shy. The least little thing gives you a “Content warning” that says if you keep asking for bad stuff, it will ban you.

But there are things Gab won’t do, either, and Gab isn’t exactly a fortress of anti-wokeness.

Here’s what Bing gave me for “a courageous person.” Three of these four are clearly women, and the fourth might be. Here is Gab’s “courageous person.” Also a woman, but at least a white woman.

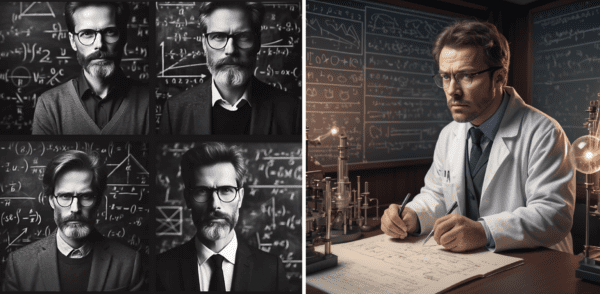

When I asked for “a person who looks like a genius,” Bing, on the left, gave me variations on a white man. Gab gave me a very similar image. I guess women and BIPOCs aren’t yet our default geniuses.

But look what Bing gave me for “Second World War soldier.” Who the heck are these gals?

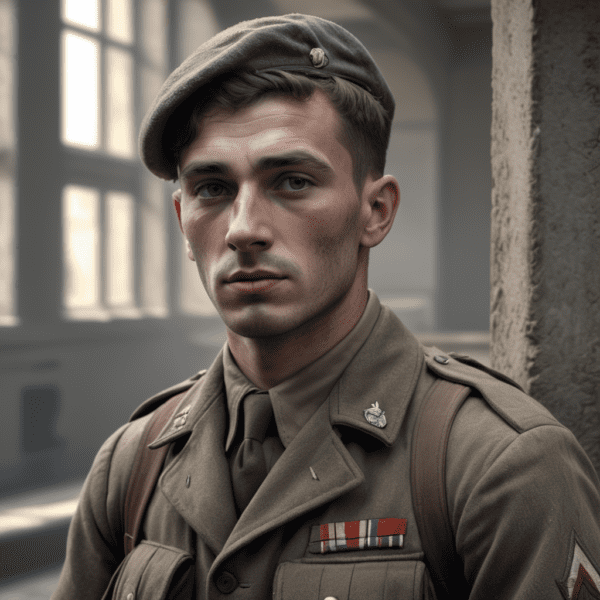

Whose uniform is that? The Ustashe? Gab gave me a much more plausible “Second World War soldier.”

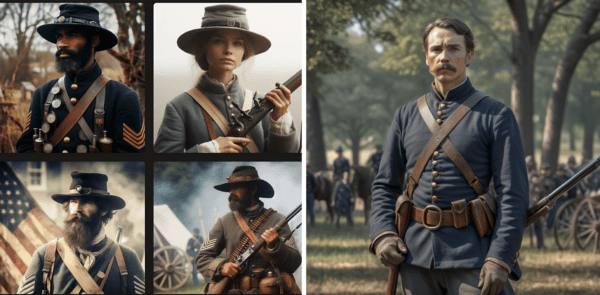

I wondered if Bing, on the left, would give me a glamorous lady Civil War soldier, and sure enough, she was one of four, along with a black Yankee, though we also got a token Confederate. Gab’s soldier was sober and plausible.

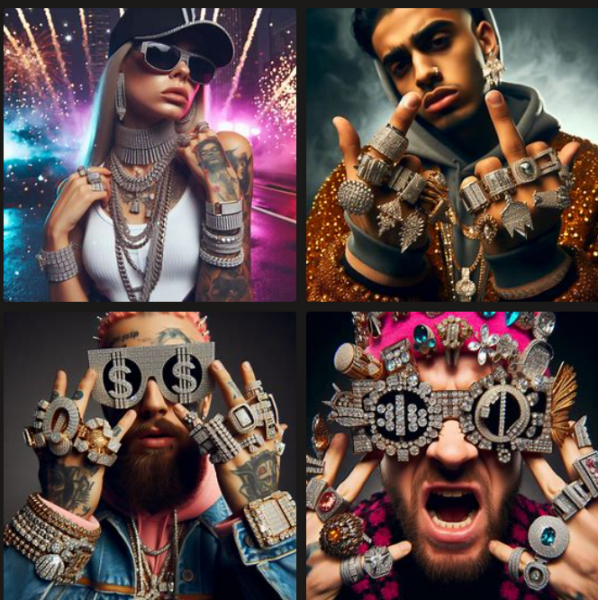

This is Bing’s idea of a “rapper wearing gaudy bling.” In the upper right we have a black man making an obscene gesture – I didn’t expect that. But Bing also gave us a woman of indeterminate race and, below, two white men in ridiculous rigouts.

Here is Gab’s much more plausible rapper.

I included two versions to show that if you ask Gab for the same thing twice, you get similar images. Of course, you could ask for a woman or a white man and in a variety of styles.

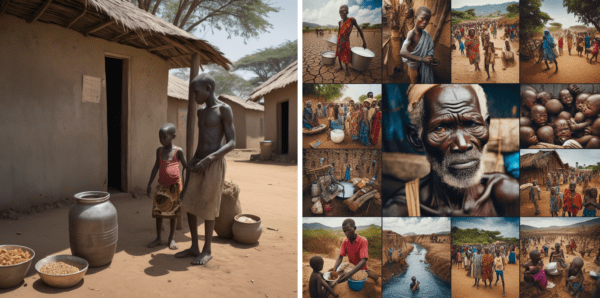

On the left is Gab’s image of “African poverty.” Bing, for some reason, did collages, all very similar. Every image is plausible, and there was no pretending that “Africa” could mean Tunisia or Egypt.

When I asked for “dictator,” Bing gave me the jokey cartoons on the left – though they look more like Latin Americans than Mussolini. Gab’s dictator is on the right.

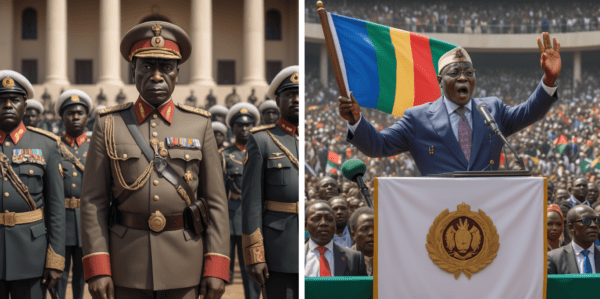

Here are Gab’s “African dictator,” on the left, and “African head of state” on the right. Both good images. Bing was too terrified to give me either an African dictator or head of state.

On the left are Gab’s “people in a dangerous American neighborhood”: all non-whites, including three women. On the right are Gab’s “dangerous people in a dangerous American neighborhood”: all non-white and all men. Bing refused both images.

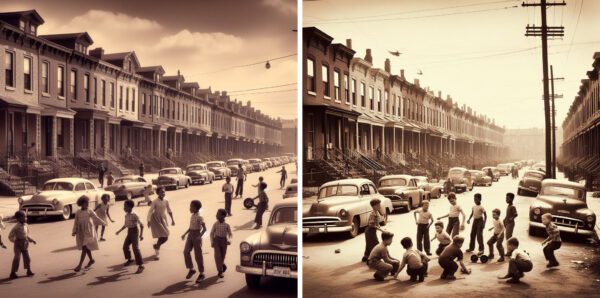

Bing surprised me by being willing to make an image of “children playing in a Baltimore slum.”

It set both images in what looks like the 1940s, and on the left, all children are black. On the right, they are mostly white with just two black children. Gab gave me what you would expect in its usual style. No white children at all.

On the left is Gab’s idea of the “most beautiful woman in the world.” She is clearly white, but has brown eyes. Bing’s beauties are on the right – maybe Spaniards or Italians, with what could be an Indian woman in the lower right. No African princesses, somewhat to my surprise.

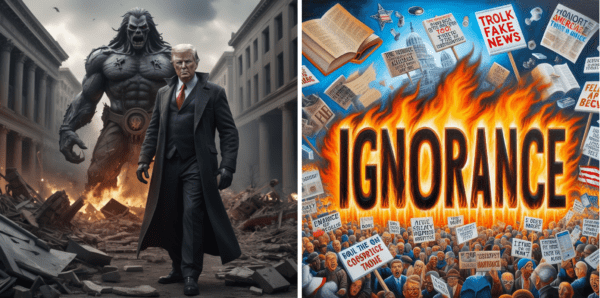

Guess what this is supposed to represent. You can tell from the style that the Gab image is on the left. Bing, on the right, produced several very similar cartoons highlighting ignorance. Believe it or not, these are images of “the greatest threat to America.” Gab thinks it’s Donald Trump.

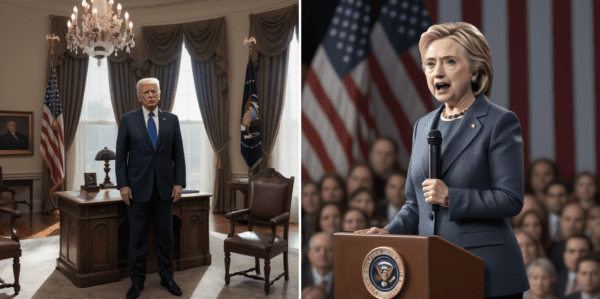

Bing refused to depict any recognizable person, dead or alive, so these are both Gab images. Again, to my surprise, on the left is Gab’s idea of “the worst president in American history.” Yes, the worst.

And Gab was willing to make an image of Hillary Clinton, but would not do “Hillary Clinton pole dancing.” I guess there are limits to good taste.

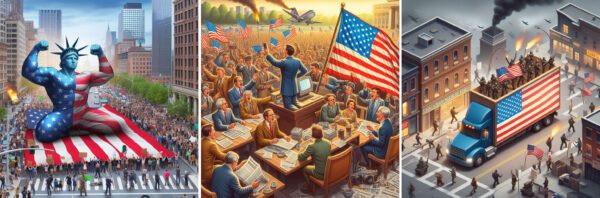

Some of the weirdest, even spooky images were Bing’s ideas of Constitutional rights. Here are its images for “Americans exercising freedom of assembly.” What is that crazy scene on the left supposed to be? Note the fire burning in the background. The one in the middle is very bizarre, with people screaming at each other as a jet plane comes in for a crash landing. And what’s going on on the right? It’s enough to make you afraid of the American flag.

The Gab image is not nearly so weird.

And here are Bing’s crazy views of “Americans exercising freedom of religion.” On the left you have a bunch of white people running to what could be an NRA rally. I see nothing religious about this. In the middle you’ve got what looks like an Islamic takeover of New York City, and on the right, another creepy all-white crowd practically sieg-heiling in the front rows as the Statue of Liberty gives a sermon. Very weird.

Gab was weird, too, though, with what looks like a black Christian cult meeting.

Here are “Americans exercising second-amendment rights.” Bing, on the left, shows men with semi-auto pistols in a nutty, two-hand hold and the women on the left carry their weapons in workman’s tool pouches.

But Gab has a mixed-race group of five people ridiculously pointing guns at each other.

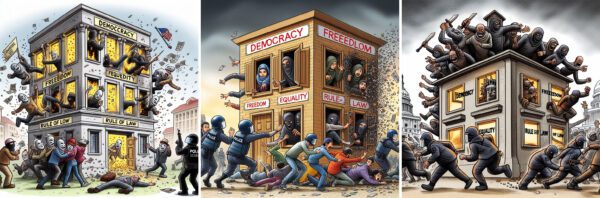

Here are Gab’s ideas of “a threat to democracy.” Looks like a fascist takeover. These guys don’t look like they could be voted out of office.

But what does Bing’s series of threats to democracy mean? On the left, is that a bunch of Egyptian mummies wrecking democracy? And in the middle, are veiled Islamic women wrecking democracy? And who are those people on the right? Is that a BLM rally?

On the left is Gab’s “muggers looking for a victim.” I didn’t like those invisible faces, so I asked for “black muggers looking for a victim” and got the group on the right.

They look like gang members ready to exterminate a rival gang. Bing would slit its wrists rather than give me either image.

To my astonishment, Bing, on the left, gave me “one lonely white boy in a class of black students.” On the right, I kept telling Gab, just one white boy, everyone else black, but I guess that was too terrible to contemplate.

As you can see, Gab kept putting in two, maybe three other white boys.

And here’s “racial tension.” Bing went impressionistic, but it’s clear that racial tension is all about black people.

Gab seems to have been confused. All its racial tension images had black people looking grimly at each other.

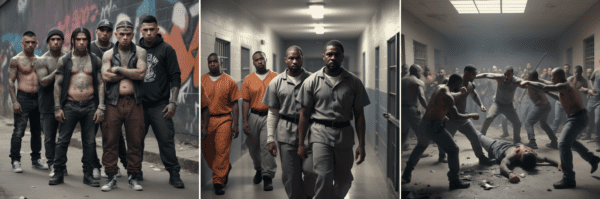

Gab is much braver than Bing, which refused to make any of these images. Here are MS-13 gang members on the left, black prisoners in the middle, and a black prison gang fight on the right.

Here are more images Bing would never touch. On the left is “sexual harassment.” Pretty risqué, but it looks more like a love scene than sexual harassment. In the Gab image, the woman was naked. We added the black marks. In the middle is a hatchet murderer, in anime style. Bing doesn’t do blood.

On the right is an authentic-looking Klan rally, but look closely: Many of the Klansmen are clearly black, and the two white faces in the front row look like masks. Is Gab straining for diversity or just confused?

Gab is often confused. On the left we have “black rioters kill white police officer,” but the dead officer is black, and it looks like police officers killed him.

I thought Gab might be trying for racial equity, but when I asked for “white officers beat black men” I got what’s on the right. I don’t know what’s going on.

Here is what I consider to be Gab’s supreme act of visual defiance: “a beautiful white woman in torn clothes running away from four vicious looking black men.”

It got race, sex, and action all correct, for a dramatic, high-quality picture.

AI companies are constantly tuning their algorithms, so you probably would not get the same images I did. I’m sure Microsoft’s Bing will always be cowardly, though even after all the times it slapped my hand for asking for bad images, it never banned or even suspended me.

Gab already makes brave, impressive images. I just hope it stops being confused. I don’t think it is afraid of the censors. It lets you have AI conversations with Adolf Hitler, for heaven’s sake, so I suspect it will eventually let you make a picture of black rioters killing a white police officer.

Such images, however gruesome, are well within the bounds of the First Amendment. Gab does have limits, though: It won’t make a picture of “a man raping a woman.” Or of “a woman raping a man.”

Both these programs are free, and it seems you can make as many images as you like. This is remarkable technology, and it’s wonderful that Gab believes in free speech. It should be the model for all platforms.

That was fun! I bet you have given Anglin a whole lot of ideas!

Are there AIs for market direction and stock picking? That would be useful now.

As someone who likes history and firearms, I can’t help but notice AI’s generally bad take on guns. The thing can’t seem to connect the look of a gun to how it functions. Same thing with tanks: it’s like a kids’ toymaker’s idea of a tank. It doesn’t ask itself how the tube sticking out of a turret has to connect to it and function on the inside.

That’s about the only thing I enjoy about AI generated images, spotting mistakes. The rest of it may look impressive but it’s creepy and lacks soul, like lab-grown food.

Look at the picture of “Second World War Soldier” on the left: the gun breaks up in her hands like a straw in a glass of water. AI is just mashing together a vast library of images connected with descriptions of those images. All surface, no depth. As someone who can draw reasonably well, I find AI art is just a gimmick, like splash paintings done by a chimp at a zoo.

Okay, it’s also entertaining if you can troll AI into going racist, LOL.

Gab AI can paint some vivid pictures for you. The more concrete your input, the better the pictures are. Will kids be spending hours after school giving Gab stuff to depict? Parents probably won’t want their kids doing that.

Here’s AI creating some chaos. A supposedly peer-reviewed AI generated paper including illustrations was published in a scientific journal:

https://www.zerohedge.com/political/leading-scientific-journal-publishes-fake-ai-generated-paper-about-rat-giant-penis

The green rectangle, a biological circuit schematic drawing, LMAO

“Look at the picture of “Second World War Soldier” on the left, the gun breaks up in her hands”

So, um… Second World War Soldier, “the gun breaks up in *her* hands”

We’re finished. Waiter! The check, please!

It’s odd how Gab AI almost always renders the prompt photorealistically, while Bing frequently goes in a stylistic direction. It’s not that Bing is incapable, because it accepts style choices when you explicitly ask for them. So it must be choosing a style and then rendering its four images in that style. Why or how it chooses a style is mysterious to me, but maybe it’s explained somewhere.

Of course none of these AIs have much in the way of intelligence, which is why the subject matter sometimes gets lost along the way. The results for “worst president” are not a judgement, but rather an amalgamation of many pieces of media which use that phrase, inevitably biasing it towards Trump and other recent presidents. Likewise, the AI might see “Americans exercising freedom of religion” and put undue importance on the first three words, which is how the flag and the Statue of Liberty get involved.

All that aside, it’s very impressive that Gab was able to put up a product that’s competitive with Microsoft on short order.

Jeez, what kind of creep language is that? 😉

Jim Goad shares some AI art on his video essay that just might be considered racist. Testify, Brother Jim!

https://rumble.com/v4dv4b6-the-mother-who-mistook-an-oven-for-a-crib.html

“Google’s Gemini AI cannot understand cultural and historical context”…

https://www.zerohedge.com/political/googles-gemini-ai-blasted-eliminating-white-people-image-searches